A.I. - Ollama Unleashed: The DIY LLM Powerhouse on Your Local Machine

Large language models (LLMs) are powerful tools, but running them on your own can be a challenge. Ollama simplifies this process, letting you run these advanced models directly on your local machine instead of paying for cloud services. Smaller models run on an ordinary laptop, while larger ones benefit from plenty of RAM or a capable GPU. With Ollama, you gain complete control over your AI tools: customize them to your specific needs and experiment freely without worrying about per-use costs. Plus, you can break free from reliance on cloud providers.

As developers, we’re constantly faced with important decisions about the tools we use. One major choice is how to deploy large language models. This decision has ripple effects, impacting everything from development speed and efficiency to how we protect our data and manage costs.

There are two main paths we can take. We can choose a local solution like Ollama, which puts us in the driver’s seat with complete control and privacy – a big plus when dealing with sensitive projects. Or, we can go with a cloud-based hosted service, which offers convenience and powerful infrastructure without requiring a ton of resources on our end.

Each path has its pros and cons, and understanding them is key to making the right choice for our specific needs and goals.

If you’re seeking a more cost-effective and flexible way to work with LLMs, Ollama is worth exploring. It provides an accessible way to harness the power of these models for your projects.

Getting to Know Ollama: It’s Not Just Another LLM Tool

Ollama isn’t just another AI tool; it’s a playground for language model enthusiasts, offering a diverse library of open models such as Meta’s Llama3 and Mistral AI’s Mistral. This is a game-changer for developers and businesses who want to handpick the right model for their unique needs.

Why Ollama? It’s Your AI Powerhouse:

- Your Model, Your Choice: Need a small, quick model like the 8B version of Llama3? Or a different model family such as Mistral? Ollama’s library offers a wide range of open models in several sizes, so you can pick one that fits both your project and your hardware. It’s like having a wardrobe full of outfits, each perfect for a different occasion.

- Customizable AI: Ollama doesn’t believe in one-size-fits-all solutions. With a Modelfile, you can give a model its own system prompt and default settings such as temperature and context length, then save the result as a new model. It’s like having a personal stylist who helps you create the perfect look for your AI.

- Privacy First: With Ollama, your prompts and responses are processed on your own machine, so there’s no need to send sensitive information to a third-party cloud service. It’s like having your own private, high-security vault for your data.

Ollama in the Real World: A Few Examples

- Data Guardians: For industries like healthcare and finance where privacy is paramount, Ollama lets you run models like Mistral locally, so sensitive data never has to leave your premises. It’s like having a top-notch security system protecting your most valuable assets.

- Personalized Solutions: Have a unique business need? Ollama and models like Llama3 let you build custom applications tailored to your exact requirements. It’s like having a team of expert craftsmen creating a masterpiece just for you.

Ollama isn’t just a platform; it’s your key to unlocking the full potential of AI. With Ollama, you’re not just using AI, you’re shaping it to fit your vision.

Ollama or Hosted LLMs: Which One’s Right for You?

| Feature | Ollama | Hosted Solutions |
|---|---|---|
| Control | You’re the boss: total control over your data and how things work. | Limited control; you rely on the provider’s rules. |
| Privacy | Your data stays on your own machine. | Privacy depends on the provider you choose and its policies. |
| Cost | No per-use fees; you pay for your own hardware and electricity. | Expect ongoing fees based on how much you use it. |
| Customization | Tweak and tailor to your heart’s content. | Customization options are usually pretty limited. |
| Offline Access | Works without an internet connection once your models are downloaded. | You’ll need an internet connection to use it. |
| Setup | It takes a bit of tech know-how to get started. | Getting started is a breeze, but rate limits and quotas can apply. |
| Resource Usage | Limited by your machine’s RAM, CPU, and GPU. | Won’t bog down your computer, but can get pricey with heavy use. |

Ollama shines when you need to tinker with sensitive data, fine-tune models to perfection, or build apps that work offline.

So, what’s the takeaway? The choice between Ollama and cloud-based hosted LLMs isn’t just about the tech – it’s about what matters most to your project. Is it control over your environment? Keeping costs low? Or maybe privacy is your top priority? Understanding the differences between these two options can help you make a decision that’s right for you. Let’s dive deeper into these features to see how they play out in real-world development scenarios.

Getting Ollama Up and Running: A Quick Start Guide

Whether you’re a Windows, macOS, or Linux user, setting up Ollama is designed to be a breeze. Here’s how to get started on your preferred operating system:

Installation and Setup

Windows:

- Download the Installer: Head to the Ollama download page and grab the Windows installer file.

- Click and Install: Double-click the downloaded file and follow the prompts. It’s pretty straightforward!

macOS:

- Download the App: Swing by the Ollama download page and get the file for macOS.

- Drag and Drop: Open the downloaded file, and simply drag the Ollama app icon into your Applications folder. You’re good to go!

Linux:

- Terminal Time: Open your terminal and paste in this handy command, which automatically downloads and installs Ollama for you:

curl -fsSL https://ollama.com/install.sh | sh

Now that you’ve got Ollama installed, you’re ready to start exploring and customizing your language models!

After Installation: Your First Steps with Ollama

Once Ollama is set up, you need to start the app before you can use it. Here’s what you do:

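- Launch Ollama:

  - Windows/macOS: Just open the Ollama app from your desktop or Applications folder.

  - Linux: Pop open your terminal and run ollama serve. This starts the Ollama server that the other commands talk to, so leave that terminal open. If the install script registered Ollama as a system service, the server is probably running already and this step isn’t needed.

- Open Your Terminal: Now you can use the Ollama commands to get things done.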

Here are some handy commands to get you started:

- ollama --help: Your cheat sheet. It lists all the commands you can use and how to use them.

- ollama serve: Starts the Ollama server (if it isn’t already running, as described above for Linux).

- ollama list: Shows all the models you’ve downloaded.

- ollama pull [model_name]: Downloads a model from the Ollama library without running it.

- ollama run [model_name]: Downloads the model if you don’t have it yet, then starts an interactive chat session with it.

- ollama rm [model_name]: Deletes a model you no longer need.

Each of these commands has a specific purpose, whether it’s managing your models or actually using them. By mastering these commands, you’ll be able to take full advantage of Ollama, whether you’re building offline apps, working with sensitive data, or just having fun experimenting with different language models.
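Behind the scenes, the CLI talks to the Ollama server over a local HTTP API, which listens on http://localhost:11434 by default. As a rough illustration, the sketch below does what ollama list does by calling the GET /api/tags endpoint and extracting the model names. It uses only the Python standard library; the helper names fetch_tags and model_names are hypothetical, and the real endpoint returns more fields (size, digest, details) than the code reads.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_HOST = "http://localhost:11434"  # Ollama's default listening address


def fetch_tags(host: str = OLLAMA_HOST, timeout: float = 5.0) -> Dict[str, Any]:
    """Call GET /api/tags, the endpoint that lists locally installed models."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read())


def model_names(tags: Dict[str, Any]) -> List[str]:
    """Pull the model names (e.g. 'llama3:latest') out of an /api/tags response."""
    return [model["name"] for model in tags.get("models", [])]
```

With the server running, print(model_names(fetch_tags())) should list the same models as ollama list. Keeping the network call and the parsing in separate functions makes the parsing easy to check without a server.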

Level Up Your Ollama Experience with OpenWebUI

If you’re used to user-friendly interfaces like ChatGPT, the switch to command line interfaces (CLI) might feel like a step back in time. That’s where OpenWebUI comes in. It teams up with Ollama to give you a sleek graphical interface, making it a breeze to work with your local language models.

Why OpenWebUI is a Game-Changer

OpenWebUI is designed to make using Ollama not only easier, but more efficient and fun. It takes those sometimes-confusing CLI commands and transforms them into a simple, easy-to-navigate interface. So, whether you’re a coding whiz or just starting out, you can manage and experiment with your models like a pro.

Get Up and Running with OpenWebUI

Setting up OpenWebUI with Ollama is a snap, so you can get back to what you do best – building awesome things with LLMs:

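- Install OpenWebUI: The easiest way to get started is by using Docker. Follow the official OpenWebUI documentation for detailed Docker installation instructions.

- Connect OpenWebUI to Ollama: OpenWebUI talks to Ollama over its HTTP API, which listens on http://localhost:11434 by default. A local Ollama install doesn’t use API keys; instead, you point OpenWebUI at that address, either with the OLLAMA_BASE_URL environment variable when you start the container or in OpenWebUI’s connection settings. If OpenWebUI runs in Docker while Ollama runs directly on your machine, remember that localhost inside the container refers to the container itself, so use an address such as http://host.docker.internal:11434 instead.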
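Because OpenWebUI reaches Ollama through its HTTP API, a quick way to troubleshoot a failed connection is to check whether the address answers at all. The sketch below resolves the base URL from OLLAMA_BASE_URL (falling back to http://localhost:11434) and queries Ollama’s GET /api/version endpoint. The helper names resolve_base_url and ollama_version are hypothetical; run the check from the same place OpenWebUI runs, since a container and your host see different networks.

```python
import json
import os
import urllib.request
from typing import Mapping, Optional

DEFAULT_OLLAMA_URL = "http://localhost:11434"  # Ollama's default address


def resolve_base_url(env: Mapping[str, str] = os.environ) -> str:
    """Use OLLAMA_BASE_URL if it is set, otherwise Ollama's default; drop any trailing slash."""
    return env.get("OLLAMA_BASE_URL", DEFAULT_OLLAMA_URL).rstrip("/")


def ollama_version(base_url: str, timeout: float = 2.0) -> Optional[str]:
    """Return the server version from GET /api/version, or None if the server can't be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.loads(resp.read()).get("version")
    except (OSError, ValueError):
        # URLError, refused connections and timeouts are OSError subclasses;
        # ValueError covers a response body that isn't valid JSON.
        return None
```

A call like ollama_version(resolve_base_url()) returning None means OpenWebUI won’t be able to connect either, which narrows the problem to the address or network rather than OpenWebUI’s settings.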

The Perks of Using OpenWebUI

- Model Management Made Easy: See your installed models in one place, pull new ones, and remove the ones you no longer need, all without typing CLI commands.

- Interact with Your Models: Craft and test prompts in real time, just like you would with ChatGPT. This makes experimenting and refining your interactions a breeze.

- Developer-Friendly Features: Saved chat history, custom model presets with their own system prompts, and document uploads that let a model answer questions about your files help you iterate quickly.

- Accessible to Everyone: Whether you’re a coding guru or a newcomer, OpenWebUI makes powerful language models approachable.

- Collaboration Made Simple: OpenWebUI supports multiple user accounts, so you can share your setup and conversations with colleagues or collaborators.

By combining OpenWebUI with Ollama, you’re not just adding functionality, you’re supercharging your entire LLM experience. This dynamic duo gives you the control and privacy of a local setup with the ease and convenience of a cloud-based tool, like ChatGPT. It’s the perfect solution for anyone who wants to harness the power of language models without sacrificing user-friendliness.

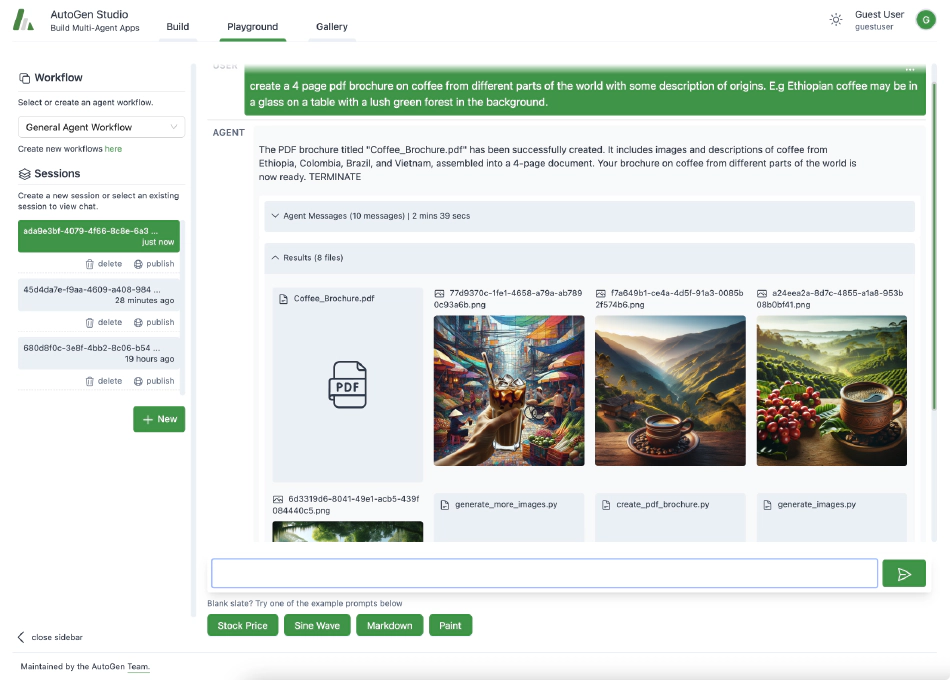

Step into the Future of Software Development with AutoGen

Picture the control room of tomorrow’s software projects, where the concept of a ‘team’ takes on a whole new meaning. Meet AutoGen, Microsoft’s open-source framework that lets you orchestrate a group of LLM-powered agents, each playing its own role in the software development lifecycle.

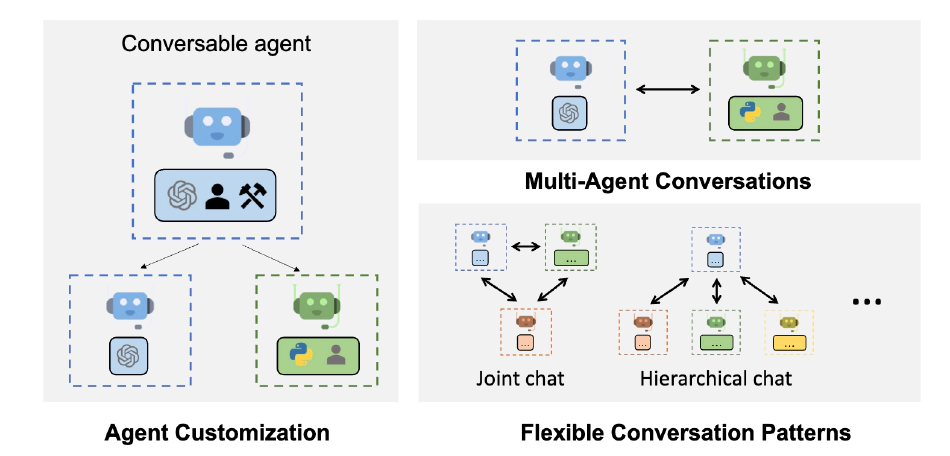

What is AutoGen?

Think of AutoGen as a way to build your very own virtual development agency. It is a framework for multi-agent applications: you define several agents, each backed by a large language model and given its own role and instructions, and they converse with one another to complete a task. But this isn’t just about automating tasks; it’s about creating a dynamic, interactive environment where each agent plays a pivotal role in the development process.

A Day in the Life with AutoGen and Ollama

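Let’s say you’re starting a new project to build an app. Instead of assembling a team, you start Ollama and point AutoGen at it: AutoGen works with OpenAI-compatible endpoints, and Ollama serves one at http://localhost:11434/v1. Then you define your virtual team: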

- The AI Project Manager: This agent lays the groundwork by outlining the project scope and requirements, much like a director setting the vision for a film.

- The AI Developer: Next up is the AI developer, who takes those requirements and begins coding. Think of them as the screenwriter, transforming an idea into a working script.

- The AI QA Tester: Now it’s the QA tester’s turn to scrutinize the code, identifying any bugs or issues. They’re like the editor, meticulously refining the script to perfection.

- The AI Marketer: Finally, the AI marketer steps in to make sure the app is ready for its audience, crafting messages and strategies like a publicist promoting a movie.

The magic of AutoGen is that these agents don’t just work in isolation; they actively communicate and collaborate. It’s like watching a group of skilled improvisers, building off each other’s ideas and refining their performance in real time. The project manager might adjust the scope based on feedback from the developer, who in turn might modify code based on the tester’s findings. This feedback loop lets each round of conversation build on and improve the last.
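AutoGen’s own API is beyond the scope of this post, but the feedback loop described above can be illustrated in plain Python. The sketch below is not AutoGen code: it is a hypothetical, minimal round-robin in which each agent (a name plus a system prompt) sees the shared transcript and appends its reply, so later agents can react to earlier ones. The ollama_llm helper assumes a running Ollama server and calls its /api/chat endpoint; any function that maps a list of messages to a reply can stand in for it, which makes the loop easy to try without a server.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Callable, Dict, List

Message = Dict[str, str]
LLM = Callable[[List[Message]], str]


def ollama_llm(model: str, host: str = "http://localhost:11434") -> LLM:
    """Return a callable that sends chat messages to Ollama's /api/chat endpoint."""
    def call(messages: List[Message]) -> str:
        body = json.dumps({"model": model, "messages": messages, "stream": False}).encode()
        req = urllib.request.Request(
            f"{host}/api/chat", data=body, headers={"Content-Type": "application/json"}
        )
        with urllib.request.urlopen(req) as resp:
            return json.loads(resp.read())["message"]["content"]
    return call


@dataclass
class Agent:
    name: str           # e.g. "Project Manager" (illustrative role name)
    system_prompt: str  # this agent's role instructions
    llm: LLM

    def respond(self, transcript: List[Message]) -> str:
        # Each agent sees its own instructions followed by the whole shared conversation.
        return self.llm([{"role": "system", "content": self.system_prompt}] + transcript)


def run_round_robin(agents: List[Agent], task: str, rounds: int = 1) -> List[Message]:
    """Let every agent speak once per round, appending each reply to the shared transcript."""
    transcript: List[Message] = [{"role": "user", "content": task}]
    for _ in range(rounds):
        for agent in agents:
            reply = agent.respond(transcript)
            # Simplification: replies are passed on as "user" turns labelled with the speaker's name.
            transcript.append({"role": "user", "content": f"{agent.name}: {reply}"})
    return transcript
```

To run the team on a local model, build each agent with llm=ollama_llm("llama3"). AutoGen layers much more on top of a loop like this, such as code execution, conversation termination rules, and optional human input.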

Using AutoGen with Ollama isn’t just about building software; it’s about experiencing a revolutionary approach to creation, where the journey is as exciting as the destination. So, get ready to lead a team of AI-powered experts, each ready to play their part in turning your vision into reality. This is the future of software development, where every project is a masterpiece in the making.

Advanced Ollama Techniques

Let’s level up your Ollama experience! Whether you’re looking to squeeze every bit of performance out of it or integrate it smoothly into your existing setup, mastering these advanced techniques will make you feel like a tech wizard. Here’s how you can turn Ollama from merely powerful to exceptionally dynamic and super-efficient.

Fine-Tuning

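Picture Ollama as a talented artist ready to adapt to your favorite art style. Fine-tuning means further training a model on specialized datasets from your industry or task so that it thinks and responds the way you want. Ollama itself doesn’t train models: you fine-tune with separate tools, then bring the result into Ollama, either as a complete model file in GGUF format or as a LoRA adapter referenced with the ADAPTER instruction in a Modelfile, and register it with ollama create. For lighter customization, a Modelfile’s SYSTEM and PARAMETER instructions change a model’s behavior without any training at all. This is perfect for creating highly specialized applications, whether that’s a finance-savvy bot, a medical assistant that understands clinical terms, or a customer support hero that knows your products inside out.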
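Ollama’s customization hooks live in a Modelfile: FROM names the base model (or a local GGUF file), ADAPTER applies a LoRA adapter produced by a fine-tuning run elsewhere, PARAMETER sets options such as temperature or num_ctx, and SYSTEM sets a default system prompt. The Python sketch below assembles one; build_modelfile and the finance-assistant values are hypothetical examples, not part of Ollama.

```python
from typing import Dict, Optional, Union


def build_modelfile(
    base: str,
    system: Optional[str] = None,
    parameters: Optional[Dict[str, Union[int, float, str]]] = None,
    adapter: Optional[str] = None,
) -> str:
    """Assemble the text of an Ollama Modelfile from its main instructions."""
    lines = [f"FROM {base}"]  # base model name or path to a local GGUF file
    if adapter:
        lines.append(f"ADAPTER {adapter}")  # e.g. a LoRA adapter from a separate fine-tuning run
    for key, value in (parameters or {}).items():
        lines.append(f"PARAMETER {key} {value}")  # one PARAMETER line per option
    if system:
        lines.append(f'SYSTEM """{system}"""')  # triple quotes allow multi-line prompts
    return "\n".join(lines) + "\n"


modelfile = build_modelfile(
    base="llama3",
    system="You are a careful assistant for financial analysts. Explain terms precisely.",
    parameters={"temperature": 0.2, "num_ctx": 4096},
)
print(modelfile)
```

Saved as a file named Modelfile, the output can be registered with ollama create finance-bot -f Modelfile and then used with ollama run finance-bot.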

Optimization

Think of Ollama as a high-end sports car. It’s already fast, but with a few tweaks, it could win races. Optimizing your hardware setup, like adding a better GPU or making sure the model fits in the memory you have, can supercharge Ollama’s performance. Model quantization is the other big lever: it stores a model’s weights at lower precision (for example, 4 bits per weight instead of 16), which cuts memory use and usually speeds up responses at a small cost in output quality. Many models in the Ollama library are published in several quantization levels, which you pick through the model’s tag. These steps ensure that your Ollama setup isn’t just running; it’s soaring.
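A back-of-the-envelope calculation shows why quantization matters so much on local hardware: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch below is only that rough estimate, not how Ollama measures memory; it ignores the context (KV) cache and runtime overhead, and real 4-bit formats store a little extra scale data, so actual usage is somewhat higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in gigabytes (10**9 bytes).

    Counts weights only: the KV cache, activations and runtime overhead are extra,
    and real quantization formats add some per-block scale data on top of this figure.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


for bits in (16, 8, 4):
    print(f"8B model at {bits}-bit: ~{weight_memory_gb(8, bits):.0f} GB")
```

For an 8-billion-parameter model this gives about 16 GB at 16-bit, 8 GB at 8-bit, and 4 GB at 4-bit, which is why 4-bit variants are popular for running models on consumer hardware.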

Integration with Other Tools

Integrating Ollama into your current toolkit is like adding a turbo button to your favorite software. Ollama exposes a local REST API (at http://localhost:11434 by default), offers official Python and JavaScript libraries, and provides an OpenAI-compatible endpoint, so it can plug into the development tools you already use, including third-party IDE extensions that connect to a local Ollama server. This means you can call upon Ollama’s power right from your IDE, turning it into a central hub for coding, testing, and deploying AI-driven features. It’s about making your workflow smoother and letting Ollama enhance your productivity without ever getting in the way.
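Most integrations boil down to HTTP calls against that local API. As an illustration, the sketch below calls POST /api/generate, which by default streams its answer as newline-delimited JSON objects, each carrying a text fragment in its response field, with done set to true on the last one. The function names and the llama3 model are assumptions for the example; the official ollama Python library or the OpenAI-compatible endpoint would hide these details.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def stream_generate(prompt: str, model: str = "llama3",
                    host: str = "http://localhost:11434") -> Iterator[bytes]:
    """POST to /api/generate and yield the raw NDJSON lines as they arrive."""
    body = json.dumps({"model": model, "prompt": prompt}).encode()  # streaming is the default
    req = urllib.request.Request(
        f"{host}/api/generate", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        for line in resp:  # each line of the body is one JSON object
            yield line


def collect_text(lines: Iterable[bytes]) -> str:
    """Join the 'response' fragments of a streamed reply, stopping at the object with done=true."""
    parts = []
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines, if any
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)
```

With the server running, print(collect_text(stream_generate("Why is the sky blue?"))) prints the full answer. To show text as it is generated, print each chunk’s response field inside the loop instead of collecting it.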

These advanced techniques are not just about using Ollama; they’re about making it an indispensable part of your technological repertoire. With fine-tuning for precision, optimization for speed, and seamless integration, you’ll unlock the true potential of Ollama, making every project faster, smarter, and more efficient. Get ready to push the boundaries of what you thought was possible and turn every project into a showcase of innovation.

Ollama in Action: Real-World Code Examples

Let’s see Ollama’s magic in action with these practical examples. From crafting engaging blog posts to troubleshooting tech issues, discover how Ollama can transform your ideas into reality with just a few lines of code.

Example 1: Effortless Blog Post Creation

Scenario: You’re a travel blogger juggling multiple destinations. Keep your content fresh and engaging with Ollama’s help.

Code Snippet:

import ollama  # official Python client (pip install ollama); assumes the Ollama server is running

response = ollama.generate(
    model="llama3",  # pull it first with: ollama pull llama3 (any model you have pulled works)
    prompt="Write a captivating blog post about exploring hidden gems in Kyoto during cherry blossom season.",
    options={"num_predict": 800},  # maximum number of tokens to generate; adjust length as needed
)

print(response["response"])  # the generated blog post text

Result: A unique, informative blog post about Kyoto’s hidden treasures, perfect for captivating your audience.

Example 2: Your Friendly AI Support Agent

Scenario: You’re providing customer support for a tech company. Let Ollama handle common technical questions with ease.

Code Snippet:

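import ollama  # official Python client; assumes the server is running and llama3 has been pulled

customer_question = "How do I troubleshoot a slow internet connection?"

response = ollama.chat(
    model="llama3",
    messages=[
        {"role": "system", "content": "You are a friendly, concise technical support agent."},  # sets the persona
        {"role": "user", "content": customer_question},
    ],
)

print(response["message"]["content"])  # the assistant's answer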

Result: A clear and helpful response to the customer’s question, improving your support team’s efficiency.

Example 3: Documentation Made Easy

Scenario: You’re a developer who needs to create and maintain clear, concise technical documentation. Ollama can lend a hand.

Code Snippet:

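import ollama  # official Python client; assumes the Ollama server is running

function_code = '''
def calculate_discount(price, percentage):
    """Calculates the discount amount and final price."""
    discount = price * (percentage / 100)
    final_price = price - discount
    return discount, final_price
'''  # single-quoted triple string, so the docstring's """ quotes don't end it early

response = ollama.generate(
    model="codellama",  # a code-oriented model from the Ollama library; pull it first or swap in llama3
    prompt="Write clear documentation (purpose, parameters, return values, usage example) for this Python function:\n" + function_code,
)

print(response["response"])  # the generated documentation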

Result: Well-structured, easy-to-understand documentation for your code, saving you valuable time and effort.

These are just a few examples of what you can achieve with Ollama. Whether it’s generating creative content, providing top-notch customer support, or streamlining technical tasks, Ollama is your versatile AI assistant, ready to supercharge your productivity and creativity.

Embrace the Future of AI with Ollama

Ollama isn’t just another tool in your developer toolkit; it’s a paradigm shift for those who want to harness the power of large language models without relying on the cloud. By bringing LLMs to your local machine, Ollama gives you unprecedented control and flexibility, empowering you to innovate at your own pace and on your own terms.

Whether you’re building groundbreaking applications, enhancing existing projects, or simply exploring the vast possibilities of AI, Ollama provides the platform to bring your ideas to life. As you integrate Ollama into your workflows and share your creations with the world, you’ll be part of a growing community of developers pushing the boundaries of AI and redefining what’s possible across industries.

The potential is limited only by your imagination. Don’t miss out on the opportunity to revolutionize your development process and problem-solving capabilities in this AI-driven world. Start your Ollama journey today and unlock the full potential of local language models.

FAQs

Answers to the most frequently asked questions.

What sets Ollama apart from cloud-based LLM solutions?

Ollama is your DIY language model toolkit, empowering you to run powerful AI models directly on your machine. This means enhanced privacy, total control, and customization freedom – unlike traditional cloud-based solutions.

Is Ollama the right fit for my AI project?

If you crave complete control over your AI tools, need to safeguard sensitive data, or want to slash those recurring cloud service fees, Ollama could be your perfect match.

I'm ready to dive in! How do I install Ollama?

It's easy! The installation process is tailored to your OS. Windows users get a simple installer, macOS users can drag and drop the app, and Linux users can run a one-line install script. Full instructions are on the Ollama website.

Can I use Ollama offline? What are the advantages?

Definitely! Once your models are downloaded, Ollama runs entirely offline. This is a major advantage for security-conscious projects or environments with limited internet access. You get to keep your data private and enjoy uninterrupted development and testing.

What makes Ollama stand out for developers?

Ollama boasts impressive customization capabilities, eliminates those pesky ongoing usage fees, and works offline. Plus, it plays well with your existing tools, making it adaptable to a wide range of development scenarios.

How can I get the most out of Ollama for my development projects?

To unlock Ollama's full potential, dive into advanced techniques like fine-tuning (tailoring models to your specific needs), optimization (for lightning-fast performance), and seamless integration with your favorite development tools.

What's OpenWebUI, and why should I use it with Ollama?

OpenWebUI is a user-friendly graphical interface for Ollama, making it a breeze to manage and interact with your models. If you prefer a visual approach over command lines, OpenWebUI is your ticket to effortless model management and enhanced user interaction.

Can I tailor Ollama for specific industries or tasks?

Absolutely! You can shape a model's behavior with a Modelfile (a custom system prompt and settings), or fine-tune a model with separate tools and import the result into Ollama. Whether you need a financial expert, a medical assistant, or a language translator, Ollama can be your AI specialist.

I'm new to local LLMs. Will I be able to use Ollama?

Don't worry! Ollama has documentation on its GitHub repository, an easy setup process, and an active community, including a Discord server, where you can find answers, ask questions, and connect with fellow users.

Where can I find more information and resources about Ollama?

Head over to the Ollama website! You'll find in-depth installation guides, a treasure trove of FAQs, and a blog packed with tutorials and real-world examples to guide you on your Ollama journey.
