Recreating the SpaceX Crew Dragon UI in 60 Days

One developer’s challenge to recreate a conceptual UI/software stack, paired with a hardware simulator, for the Crew Dragon in 60 days.

All right, so I know what you’re thinking. Who is this guy using such an ostentatious title to associate his work with the world’s (universe’s?) most innovative company ever to grace us with its presence? And you’re probably right. But there’s more to it than a flashy title: I’m here to prove myself, learn about rocketry, hopefully impress the right people, and have some fun along the way.

I’m interested in things that change the world or that affect the future and wondrous, new technology where you see it, and you’re like, ‘Wow, how did that even happen? How is that possible?’ — Elon Musk

I do not claim any affiliation with SpaceX, its vendors, or its competitors; I’m just a loyal fan and fellow space nerd.

A lot of information may only be briefly mentioned due to content length/time constraints; if there’s anything you’d like to see me take a deeper dive into, be sure to leave a comment!

My Inspiration

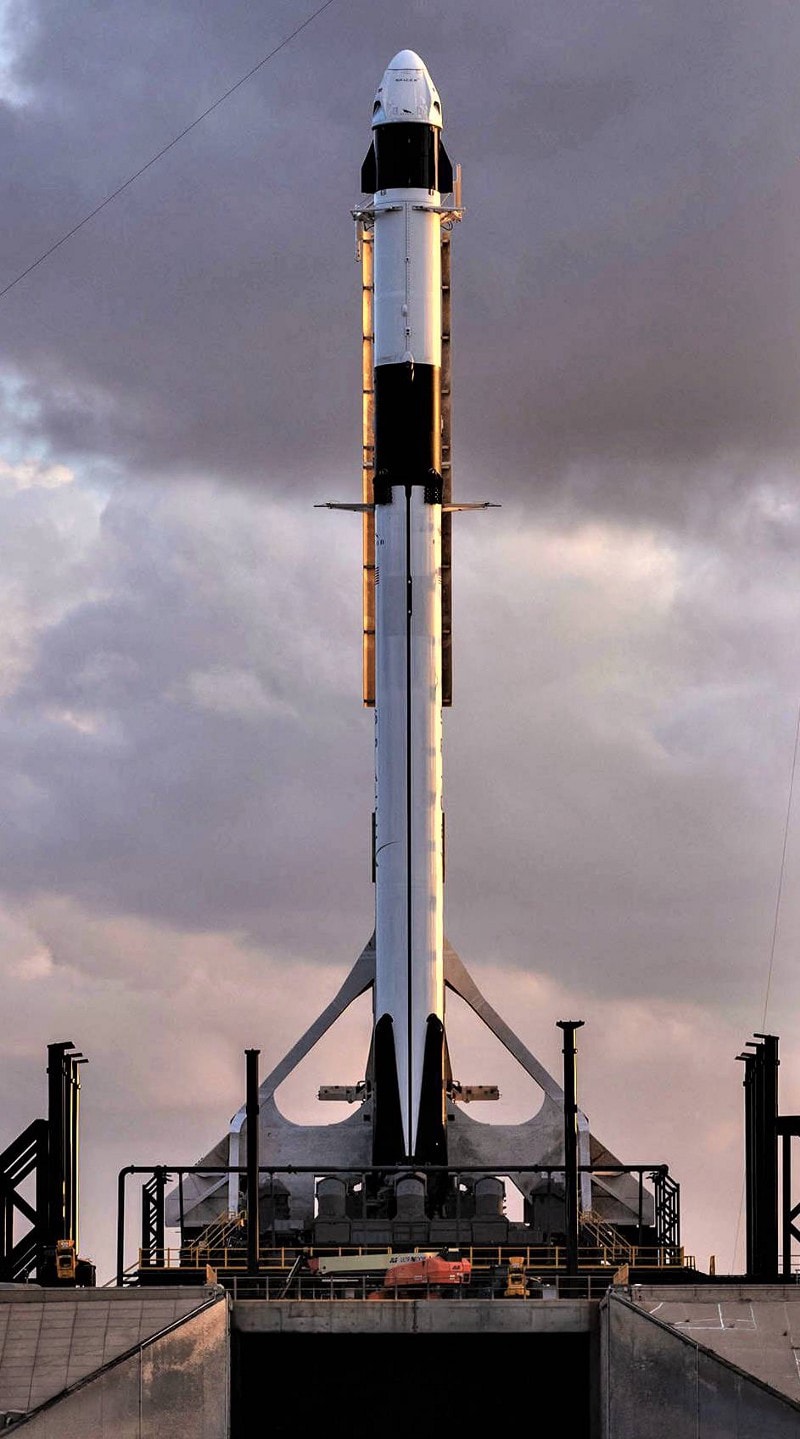

Although I’m a self-proclaimed software engineer, I’m certainly no rocket scientist. I had long accepted that my chances of ever being part of “making humans a spacefaring species” were far out of reach… until one morning, when I noticed an open position for a Frontend Engineer at SpaceX?!? Could it be? Had modern, web-technology-based UI/UX finally found its place in outer space?

Knowing it was a total shot in the dark, I applied for the position anyway. I made it through a few rounds of interviews and got to speak with some brilliant minds, but ultimately no offer was made due to “other candidates having more directly applicable recent experience.” While reflecting deeply for a few days afterward, I realized my role in recent years had pushed me toward backend/support work rather than frontend development. I admit it; I had become rusty in some crucial areas, which was strongly evident during my screenings. Then it hit me.

If the potential of an opportunity with SpaceX was this important to me, I wanted a chance to apply again in the future as a better technical fit. So why not challenge myself to recreate the UI for the Dragon capsule in the meantime? Better yet, let’s try to pull it all off with a home-lab-style hardware simulator, and do it all within 60 days (while working a full-time job). A bit of a one-person hackathon, if you will.

Persistence is very important. You should not give up unless you are forced to give up. — Elon Musk

Challenge accepted; this is the story.

Photo Credit: SpaceX via Associated Press

Planning, Research & Design

Anyone who’s followed SpaceX over the years knows that their approach to design allows for rapid evolution, iterating toward perfection based on simulations, prototypes, trials, and user feedback loops. All code and components should be structured to anticipate change, whether to the design, hardware, vehicle, mission, or end user; everything must be abstracted into separate modular layers that can adjust independently.

Process and approach must be dynamic, and, just like the rockets it’s designed to launch on, all code should be reusable. Every project or task requires a unique, dynamic, and flexible plan; this one is no exception. Along the way, my strategy and approach changed many times as I tried to prove my own assumptions false to avoid self-induced cognitive bias.

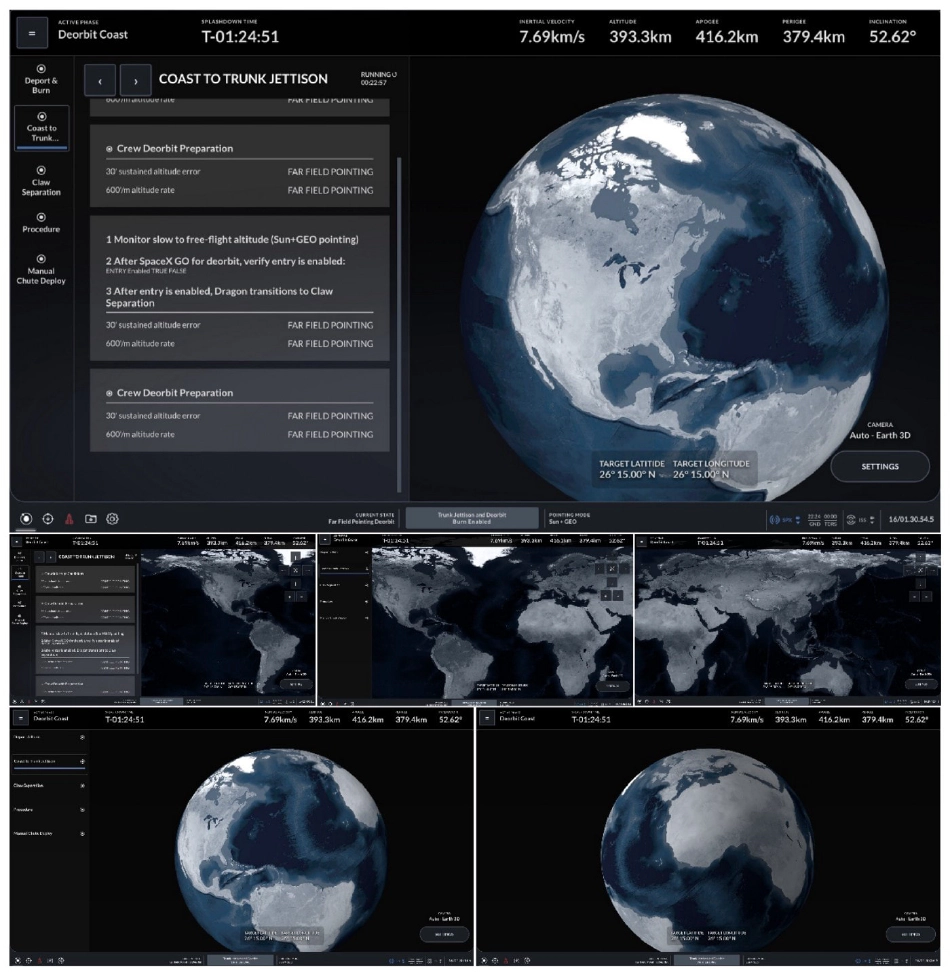

For this project, we’re fortunate enough to skip a lot of foundational UI/UX exploration by basing most design decisions on the latest UI revisions seen on recent Dragon launches; this allows us to focus on the main tasks in scope: architecture, structure, components, and reusability.

Although a few main screens have been captured, there are still MANY areas/components that have not been seen publicly and must be based on blurry photos, shaky video frames, and, primarily, my own research and assumptions.

There’s a total of 25 to 30 individual pages, and SpaceX may have added some more since my flight. With any aircraft or spacecraft, you always iterate because it makes sense and it’s easy and will help the crew. — Doug Hurley

Once we get through the initial task of recreating what we know exists, we can explore conceptual additions and enhancements. (I spent more time than I’d like to admit watching every launch broadcast and documentary I could find, looking for any new possible screen grabs I hadn’t yet seen.)

First, we need to break the project down into a series of smaller tasks and solve them individually, approaching each task as a “minimum marketable feature” that can be later expanded. Although we have a lot to build off, we still have a complex issue needing elegant and straightforward solutions.

Before we can do that, though, we need to know what the project IS. Since we don’t have access to the rockets, the BOM (bill of materials), or internal proprietary information, we’ll need to come up with our own “target device stack.”

Target Device & Software Stack

Publicly available details about the specific hardware used are few and far between. Much of this will be hypothetical and based on off-the-shelf hardware, to make it more realistic for me to understand, build, deploy, and debug.

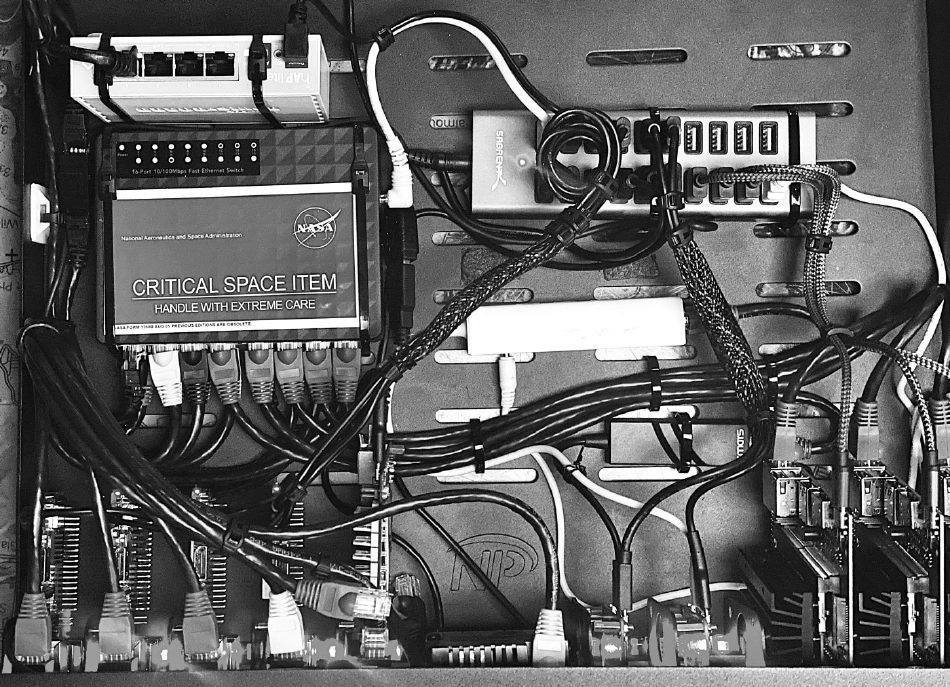

For this project, I’ll be recreating the cockpit UI so that it can interact with simulated rocket functions. This is a gross oversimplification of the complexity a real-world environment would require; i.e., it will NOT operate any vehicle and serves only as a proof of concept of a UI interacting with the theoretical systems/configuration used. It will more or less be a simplified recreation of the cockpit simulator pictured above.

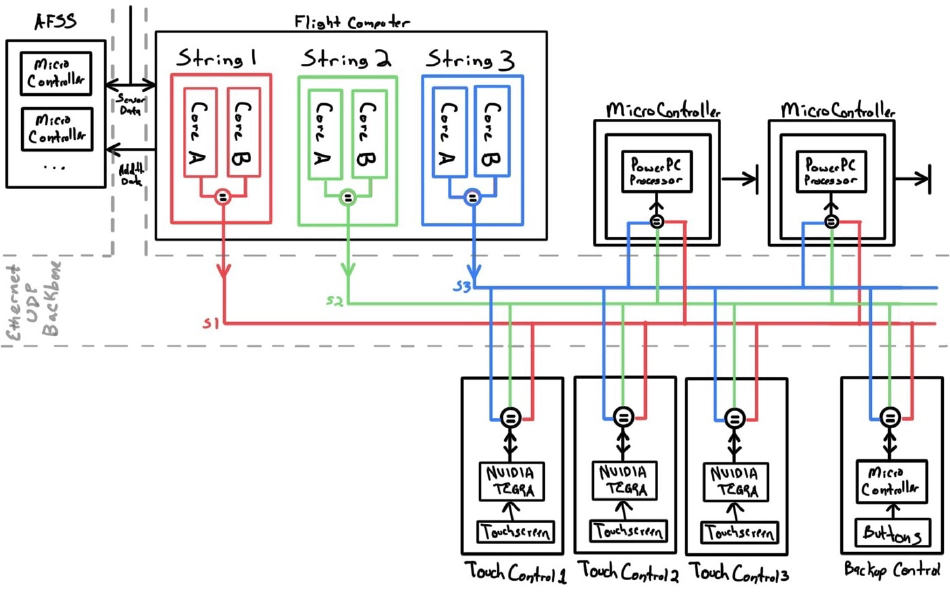

I’ve code-named this stack “MUTANT” (Multiarchitecture UDP-Transport Aided Network Technology). The MUTANT system consists of six main components: Flight Computer, Microcontrollers, Touch Interface, Physical Backup Interface, AFSS (Autonomous Flight Safety System), and network backbone. Also included in my rack, but not detailed in this article, are a dedicated build server (Git repo, CI/CD pipeline, and QA test server), an NTP server (for time synchronization), an Android touchscreen (used to trigger simulated events and monitor system status), and a MikroTik wireless access point simulating future onboard Starlink Wi-Fi integration (isolated from any backbone traffic).

Flight Computer

A fault-tolerant, triple-redundant “Data Processing layer.” Consider this both the memory and the brain of the vehicle: all sensor data is processed and analyzed here, and outputs are triggered based on logical conditions. The Flight Computer holds the “truth” state of the vehicle. When an incorrect “truth” could result in injury or loss of human life, triple redundancy is a minimum requirement.

Touch Interface Control

A stateless touch UI that sends commands to the flight computer(s) and monitors vehicle systems. It is the primary control interface for the vehicle and the main focus of this project.

Physical Interface Control

A mission/safety-critical physical interface (buttons) that sends commands to the flight computer(s)/AFSS; used as a redundant backup to Touch Interface Control for critical operations (commands such as deorbit, abort, etc.).

Microcontroller

A “PLC” used to control outputs for onboard systems (thrusters, flight control surfaces, etc.), read sensor inputs (inertial measurement unit, gyro, temperature, pressure, etc.), and stream camera feeds (RTSP). It uses a voter-judge system based on triple-redundant flight strings.

Data Bus

The intra-system communication layer, connecting all hardware components over a single data bus backbone with a performance-tuned network stack. If you consider the Flight Computer the “brain” of the system, then the data bus is its “nervous system.”

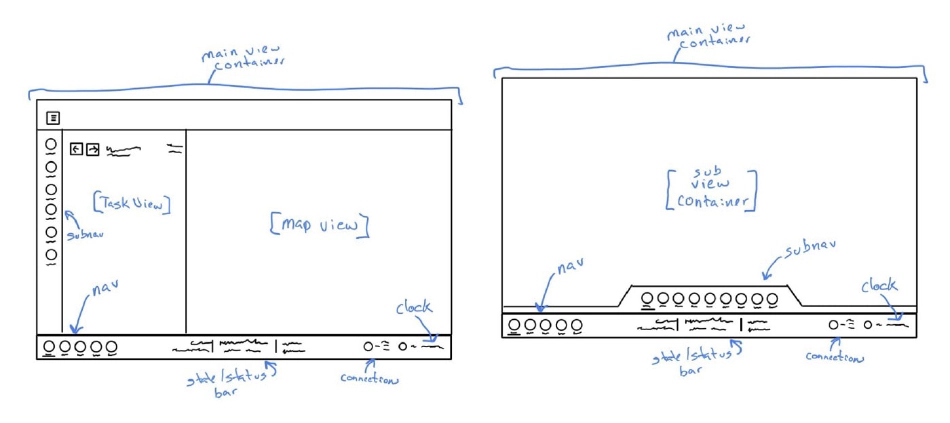

Lo-Fi Wire-Framing

For me, rapid wireframing is crucial, even though we already have the “final” UI to base things on. It gives me a “big-picture” view before architecture and development begin and lets me quickly plan screens/components that lack public imagery. There’s no need to get too granular here; at this early stage, the goal is simply to help create a plan.

Foundational Architecture

I did my best to match my design model and technical approach as closely as I could to what I assume the existing systems to be; it still resulted in a VERY oversimplified representation of those real-world systems. I hope my assumptions and approach aren’t too flawed; this has been a lot of information to absorb while making rapid development decisions.

Fair warning, this section may dive a bit deeper into technical details than some readers are interested in; feel free to skip to the next section.

All you really need to know for the moment is that the universe is a lot more complicated than you might think, even if you start from a position of thinking it’s pretty damn complicated in the first place.

Flight Computer

This could technically be approached in many different ways; essentially, we need a “memory cache” and an “event bus” paired with some rudimentary data processing scripts. After exploring a few options, I ultimately chose Redis paired with a custom UDP proxy.

I’m running Docker on each flight computer to take advantage of container isolation and CPU affinity: two Redis containers are pinned to dedicated CPUs (Core A on CPU 2, Core B on CPU 3), while the root OS is restricted to CPUs 0 and 1. Each Core container also runs a UDP-to-Redis proxy and some data processing scripts built with Spring State Machine that calculate values, apply filters, and write the results back as new key-values.

*A real-time kernel patch was initially applied to reduce latency but was later removed due to USB interrupt timing issues with the Pi’s USB-based onboard Ethernet controller. A different SBC architecture could overcome this, but it isn’t critical for this project’s scope.

The third container runs our Flight Core broker/comparison program: a Redis-CLI client that polls specific keys (calculated outputs) from Core A and Core B and compares the results in a 10 Hz loop. If the results match, a UDP datagram with the resulting data is posted to the flight string. If the results differ, the Flight Computer is rebooted, and no data is posted other than a UDP datagram announcing the failed data/reboot. If the results time out, they count as failed data and trigger a reboot. This program also acts as our watchdog and heartbeat source.

Note: I don’t think this is the official approach taken, but it suits my needs for a quick delivery timeline with a stack that’s familiar to me.
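To make the broker’s rules concrete, here is a minimal TypeScript sketch of a single comparison tick, written as a pure function so it can be unit tested without Redis or a network. It is an illustration under assumptions, not the actual program: the `compareCores` name, the reading/decision types, and passing in a list of keys are all hypothetical, and polling Redis, posting the UDP datagram, and triggering the reboot are left to the caller.

```typescript
// Hypothetical sketch of one Flight Core broker tick (not the author's actual program).
// Each core's reading is the set of calculated output keys polled from its Redis
// instance, or null if the poll timed out.
type CoreReading = { values: Record<string, number> | null };

type BrokerDecision =
  | { kind: "publish"; values: Record<string, number> }
  | { kind: "reboot"; reason: "timeout" | "mismatch" };

function compareCores(a: CoreReading, b: CoreReading, keys: string[]): BrokerDecision {
  const av = a.values;
  const bv = b.values;
  // A timeout from either core counts as failed data.
  if (av === null || bv === null) {
    return { kind: "reboot", reason: "timeout" };
  }
  const agreed: Record<string, number> = {};
  for (const key of keys) {
    const va = av[key];
    const vb = bv[key];
    // A missing key, or any difference (NaN never matches), fails the whole tick.
    if (va === undefined || vb === undefined || va !== vb) {
      return { kind: "reboot", reason: "mismatch" };
    }
    agreed[key] = va;
  }
  // Both cores produced identical outputs: safe to post to the flight string.
  return { kind: "publish", values: agreed };
}
```

A scheduler would call this every 100 ms (10 Hz), pass a timed-out poll as `values: null`, and then either publish the agreed values or announce the failure and reboot. Exact equality is deliberate here: both cores run the same code on the same inputs, so any difference is treated as a fault.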

Touch Interface Control

Coming from a background in many JS frameworks/libraries (jQuery, Backbone, AngularJS, Meteor, React, Angular), I had many implementation options. After digging through the information that was publicly available, I determined that I needed something clean, fast to develop with, and easy to understand.

Ultimately, I chose a combination of Vue.js, THREE.js, LIT Element, WebAssembly, and Electron, running on three NVIDIA Jetson Nanos with a custom Buildroot OS.

Physical Backup Control

Initially, I planned to build a custom PCB with membrane buttons to mimic the physical control interface onboard. However, due to time constraints and the main focus being on the UI, I simplified things by using a Stream Deck over a custom API.

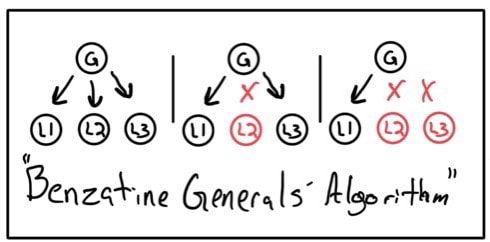

Microcontroller

A lightweight microcontroller runs a rudimentary flight string comparison algorithm to avoid the Byzantine Generals Problem at the edge node, paired with triplicated flight strings to handle situations where the computers do not agree. Once the correct flight string is determined, the microcontroller triggers outputs to drive external hardware interfaces (actuator arms, motorized valves, etc.).
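A 2-out-of-3 majority vote is one common way to pick the “correct” flight string. The TypeScript sketch below assumes a simplified command shape (`valveOpen`, `thrusterPct`) and hypothetical names (`vote`, `FlightCommand`); real microcontroller firmware would more likely be written in C, so treat this purely as an illustration of the voting logic. A missing string is passed as `null`.

```typescript
// Hypothetical 2-out-of-3 voter for triplicated flight strings (illustrative only).
// The command fields are simplified assumptions; a missing string is passed as null.
interface FlightCommand {
  valveOpen: boolean;
  thrusterPct: number;
}

type VoteResult =
  | { ok: true; command: FlightCommand; dissenter: number | null }
  | { ok: false };

type FlightStrings = [FlightCommand | null, FlightCommand | null, FlightCommand | null];

function sameCommand(x: FlightCommand, y: FlightCommand): boolean {
  return x.valveOpen === y.valveOpen && x.thrusterPct === y.thrusterPct;
}

function vote(strings: FlightStrings): VoteResult {
  // Each entry is [first, second, remaining] string indices.
  const pairs: Array<[number, number, number]> = [
    [0, 1, 2],
    [0, 2, 1],
    [1, 2, 0],
  ];
  for (const [i, j, k] of pairs) {
    const a = strings[i];
    const b = strings[j];
    if (a !== null && b !== null && sameCommand(a, b)) {
      // Majority found; flag the remaining string if it is missing or disagrees.
      const c = strings[k];
      const dissenter = c !== null && sameCommand(a, c) ? null : k;
      return { ok: true, command: a, dissenter };
    }
  }
  // No two strings agree: refuse to drive any outputs.
  return { ok: false };
}
```

Reporting the dissenting string, rather than silently ignoring it, lets the system flag a faulty flight computer, and refusing to act when no two strings agree keeps a single bad input from driving the outputs.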

Data Bus

Since little is publicly known about the communication protocols used onboard Falcon/Dragon, I’ve had to make some assumptions based on my research. Fortunately, MIL-STD-1553 appears to be used only for remote command/control from the ISS and for payload integration (maybe a fun addition at a later date, but not a challenge I’m interested in focusing on right now). Ground systems such as the fuel refinery may operate on industrial control standards like Profinet or Modbus, though neither seems adequate for a vehicle control system. CAN bus would be an exciting option but could slow down software development due to hardware or emulation requirements.

Ultimately, I’ve concluded that a standard Ethernet backbone is used, in a star topology without ARP, with static IP mapping tables, UDP datagrams for real-time data, and TCP connections for some non-time-critical systems. UDP is excellent for real-time sensor data, but packets can arrive out of order (or not at all). We could explore QUIC for the transport layer with stream multiplexing, though I’m making the call to keep this out of the project’s scope.
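Because UDP can reorder or drop packets, a receiver of real-time telemetry can simply keep only the newest reading from each sender. Here is a minimal sketch, assuming each datagram carries a per-sender 32-bit sequence number (a field the article does not specify). `SequenceFilter` and `isNewer` are hypothetical names; the comparison uses serial-number arithmetic in the style of RFC 1982 so the counter can wrap around.

```typescript
// Hypothetical receiver-side filter that drops stale or duplicate UDP datagrams,
// assuming each sender stamps its datagrams with a 32-bit sequence number.
const SEQ_MOD = 2 ** 32;
const SEQ_HALF = 2 ** 31;

// True when sequence number a is newer than b, allowing the counter to wrap
// (serial-number comparison in the style of RFC 1982).
function isNewer(a: number, b: number): boolean {
  const diff = (a - b + SEQ_MOD) % SEQ_MOD;
  return diff !== 0 && diff < SEQ_HALF;
}

class SequenceFilter {
  // Last accepted sequence number per sender ID.
  private lastSeen = new Map<string, number>();

  // Returns true if the datagram should be processed, false if stale or duplicate.
  accept(senderId: string, seq: number): boolean {
    const last = this.lastSeen.get(senderId);
    if (last === undefined || isNewer(seq, last)) {
      this.lastSeen.set(senderId, seq);
      return true;
    }
    return false;
  }
}
```

This filter only discards stale or duplicate datagrams; it does not buffer and reorder them. For sensor values, where only the latest reading matters, that trade-off is usually acceptable.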

Each UDP datagram is capped at 935 bytes so that it fits into a single mbuf, avoiding the use of 4096-byte clusters; even 1 byte over 935 would spill into a cluster, wasting a significant amount of memory per write. Datagrams are set to a fixed size using byte arrays, allowing for more consistent packet throughput. This also adds a thin layer of “pseudo security” through obfuscation, making it slightly more challenging to intercept or inject false data (and also to debug, as I later found out… sigh).
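Here is a sketch of what a fixed-size datagram built from byte arrays could look like in TypeScript, using `DataView` over a `Uint8Array`. The layout (a 32-bit sequence number, a 16-bit entry count, then 10-byte key-ID/`f64` value pairs, zero-padded to 935 bytes) is a hypothetical one chosen for illustration; only the 935-byte cap comes from the text above. Actually sending the bytes over UDP (e.g., with Node’s `dgram` module) is omitted.

```typescript
// Hypothetical fixed-size telemetry datagram layout (not the author's actual format):
//   [0..3]  u32 sequence number
//   [4..5]  u16 entry count
//   then N entries of { u16 keyId, f64 value }, zero-padded to DATAGRAM_SIZE bytes.
const DATAGRAM_SIZE = 935;
const HEADER_SIZE = 6;
const ENTRY_SIZE = 10;
const MAX_ENTRIES = Math.floor((DATAGRAM_SIZE - HEADER_SIZE) / ENTRY_SIZE); // 92

interface Telemetry {
  seq: number;
  entries: Array<{ keyId: number; value: number }>;
}

function encodeDatagram(t: Telemetry): Uint8Array {
  if (t.entries.length > MAX_ENTRIES) {
    throw new RangeError(`too many entries: ${t.entries.length} > ${MAX_ENTRIES}`);
  }
  const bytes = new Uint8Array(DATAGRAM_SIZE); // zero-filled, so padding is implicit
  const view = new DataView(bytes.buffer);
  view.setUint32(0, t.seq); // DataView defaults to big-endian (network byte order)
  view.setUint16(4, t.entries.length);
  t.entries.forEach((e, i) => {
    const offset = HEADER_SIZE + i * ENTRY_SIZE;
    view.setUint16(offset, e.keyId);
    view.setFloat64(offset + 2, e.value);
  });
  return bytes;
}

function decodeDatagram(bytes: Uint8Array): Telemetry {
  // Every valid datagram has exactly the same length.
  if (bytes.length !== DATAGRAM_SIZE) {
    throw new RangeError(`expected ${DATAGRAM_SIZE} bytes, got ${bytes.length}`);
  }
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const count = view.getUint16(4);
  if (count > MAX_ENTRIES) throw new RangeError(`corrupt entry count: ${count}`);
  const entries: Telemetry["entries"] = [];
  for (let i = 0; i < count; i++) {
    const offset = HEADER_SIZE + i * ENTRY_SIZE;
    entries.push({ keyId: view.getUint16(offset), value: view.getFloat64(offset + 2) });
  }
  return { seq: view.getUint32(0), entries };
}
```

Because every datagram has the same length, the receiver can reject malformed packets with a single length check before parsing anything, and the entry count is validated against the fixed capacity.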

Work like hell. I mean you just have to put in 80 to 100 hour weeks every week. This improves the odds of success. If other people are putting in 40 hour workweeks and you’re putting in 100 hour workweeks, then even if you’re doing the same thing, you know that you will achieve in four months what it takes them a year to achieve. — Elon Musk

UI Components/Library

I made a hard rule to create UI components using only pure HTML, CSS/SCSS, SVG graphics, or THREE.js objects. No CSS libraries were used whatsoever (though there were definitely times I wished I had at least used an existing grid layout… c’est la vie). All icons, 3D objects, and map textures were provided by third parties and are cited below.

JS Frameworks

As mentioned earlier, I chose to go with a combination of: Vue.js, THREE.js, LIT Element, WebAssembly, and Electron.

Vue.js

Used for elements/views/routing, mainly because I hadn’t worked with Vue a whole lot, and it seemed like a great opportunity to learn more. (PS. I’m now a big fan of Vue. Vue is just what I’ve always wanted from a JS framework: fairly non-opinionated, easy data binding, organized separation of components/views, and, best of all, easy enough for me to understand and work with within a day. I don’t know why I didn’t give Vue more of a chance sooner.)

THREE.js

For 3D object/environment rendering. Another new one for me that I’ve been meaning to get more hands-on time with. Working with THREE was initially far more intimidating than I had assumed, though I was able to pull off what I wanted after some quick math refreshers. Several late nights went into a self-guided crash course in orbital mechanics to calculate orbit paths from the latest known velocity vectors, so they could be displayed on the 3D environment maps. I have more and more respect for current and past SpaceX-ers with every minute I spend on this stuff.
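The core of computing an orbit from the latest velocity vector is the two-body relationship between a state vector (position r, velocity v) and the orbit’s shape. This TypeScript sketch derives the semi-major axis (from specific orbital energy), the eccentricity (from the eccentricity vector), and the period (from Kepler’s third law). It assumes an Earth-centered inertial frame, kilometers and km/s, and no perturbations such as J2 or drag; `orbitFromState` is a hypothetical name, not the project’s code.

```typescript
// Sketch: two-body orbit summary from a single position/velocity state vector.
// Assumes an Earth-centered inertial frame, km and km/s, and no perturbations.
type Vec3 = [number, number, number];

const MU_EARTH = 398600.4418; // km^3/s^2, Earth's standard gravitational parameter

const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const norm = (a: Vec3): number => Math.sqrt(dot(a, a));

interface OrbitSummary {
  semiMajorAxisKm: number;
  eccentricity: number;
  periodMin: number;
}

function orbitFromState(r: Vec3, v: Vec3, mu = MU_EARTH): OrbitSummary {
  const rMag = norm(r);
  const vMag = norm(v);
  // Specific orbital energy: v^2/2 - mu/r (negative for a closed orbit).
  const energy = (vMag * vMag) / 2 - mu / rMag;
  if (energy >= 0) throw new RangeError("state vector is not on a closed (elliptical) orbit");
  const a = -mu / (2 * energy);
  // Eccentricity vector: ((v^2 - mu/r) r - (r . v) v) / mu
  const k = vMag * vMag - mu / rMag;
  const rv = dot(r, v);
  const eVec: Vec3 = [
    (k * r[0] - rv * v[0]) / mu,
    (k * r[1] - rv * v[1]) / mu,
    (k * r[2] - rv * v[2]) / mu,
  ];
  // Kepler's third law: T = 2*pi*sqrt(a^3 / mu), converted from seconds to minutes.
  const periodSec = 2 * Math.PI * Math.sqrt((a * a * a) / mu);
  return { semiMajorAxisKm: a, eccentricity: norm(eVec), periodMin: periodSec / 60 };
}
```

With the semi-major axis and eccentricity in hand (plus the orbit’s orientation from the angular-momentum and eccentricity vectors), the ellipse can be sampled into points and drawn as a line in the THREE.js scene.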

LIT Element

For separating out reusable elements. Another one I’d never used before, but I noticed it mentioned in posted job listings, and I’ll never turn down an opportunity to learn. Since I’m using Vue, you may wonder why I’m not just using Vue components for this. I went this route because I want these UI components (standard Web Components under the hood) to be usable in any framework/codebase, which is essential when working with multiple teams across multiple architectures and languages.

WebAssembly

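High-precision floating-point calculations are handled in C++ compiled to WebAssembly to help work around the pitfalls of JavaScript arithmetic (0.1 + 0.2 = 0.30000000000000004 …😩). Keep in mind that a WebAssembly f64 is the same IEEE 754 double as a JavaScript number, so any precision gain has to come from how the C++ code does the math (for example, integer or extended-precision arithmetic), not from WebAssembly itself. This part didn’t get used much yet, but I wanted to demonstrate a case where WebAssembly can be helpful.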
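The snippet below illustrates the rounding issue in TypeScript, along with two common mitigations: tolerance-based comparison and integer fixed-point arithmetic. It is not the project’s C++/WebAssembly code; the tolerances and the micro-unit scale are arbitrary assumptions chosen for the example.

```typescript
// Illustration only: binary64 rounding and two common mitigations.
// JS numbers and WebAssembly f64 values are both IEEE 754 doubles.
const rawSum = 0.1 + 0.2; // 0.30000000000000004, not 0.3

// Mitigation 1: compare with a relative/absolute tolerance instead of ===.
function approxEqual(a: number, b: number, relTol = 1e-12, absTol = 1e-15): boolean {
  return Math.abs(a - b) <= Math.max(relTol * Math.max(Math.abs(a), Math.abs(b)), absTol);
}

// Mitigation 2: keep values as integers in a fixed unit (micro-units here) and
// convert to a decimal number only for display. Integer sums are exact while
// they stay within Number.MAX_SAFE_INTEGER.
const SCALE = 1_000_000;
const toMicro = (x: number): number => Math.round(x * SCALE);
const fromMicro = (m: number): number => m / SCALE;

const fixedSum = fromMicro(toMicro(0.1) + toMicro(0.2)); // 0.3
```

Which mitigation fits depends on the value: tolerances suit derived physics quantities, while fixed-point integers suit values that must add up exactly, such as counters or displayed decimal totals.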

Electron

Used for our rendering/webview to display in full-screen kiosk mode without using a browser. Also, an excellent way to package up all assets for a self-contained offline application.

UI Components & Screens

Each of the following comprises many subcomponents, each configured through exposed parameters and containing no static data, items, or image paths.

Phew! A quick sigh of relief before I realize just how much work is still needed to pull this all together. The further I get, the more I realize how much I don’t know, and the more I question what exactly I signed myself up for 😅. Fear not, though; I’m almost there. It’s finally time to take this to the finish line.

Testing & Refinement

Luckily, I’m not responsible for any lives with this project; however, testing should always be treated with the utmost seriousness and respect, ESPECIALLY when dealing with rockets. “Test, test, test again, and test some more” is always a good mantra to stick with during development.

Don’t delude yourself into thinking something’s working when it’s not, or you’re gonna get fixated on a bad solution. — Elon Musk

To begin, one of the best ways to catch and prevent simple errors early is to incorporate TypeScript from the start, though it can only prevent so much. As a one-person team, automated tests are still very much our friend, but a good focus on manual testing can be just as important. Not wanting to skip any steps, I set up a suite of basic tests to ensure the data provided is always what’s displayed.
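As an illustration of that kind of rudimentary test, here is a table-driven check that a formatter renders raw telemetry exactly as expected. The formatter, the readout specs (altitude received in meters and shown in km, speed in m/s), and the expected strings are hypothetical examples, not the project’s components; in practice, a test runner would host cases like these.

```typescript
// Hypothetical readout formatter plus a table-driven "provided == displayed" check.
interface ReadoutSpec {
  unit: string; // label shown next to the value
  scale: number; // multiplier from the raw telemetry unit to the display unit
  decimals: number; // digits after the decimal point
}

function formatReadout(raw: number, spec: ReadoutSpec): string {
  // Non-finite values get an explicit placeholder instead of "NaN" or "Infinity".
  if (!Number.isFinite(raw)) return `--- ${spec.unit}`;
  return `${(raw * spec.scale).toFixed(spec.decimals)} ${spec.unit}`;
}

const ALTITUDE: ReadoutSpec = { unit: "km", scale: 0.001, decimals: 1 }; // raw meters
const SPEED: ReadoutSpec = { unit: "m/s", scale: 1, decimals: 0 };

// [raw value, spec, exact string the screen should show]
const cases: Array<[number, ReadoutSpec, string]> = [
  [408123, ALTITUDE, "408.1 km"],
  [0, ALTITUDE, "0.0 km"],
  [7660.4, SPEED, "7660 m/s"],
  [Number.NaN, SPEED, "--- m/s"],
];

// Returns a description of every case whose rendered text differs from the expectation.
function runDisplayTests(): string[] {
  const failures: string[] = [];
  for (const [raw, spec, expected] of cases) {
    const shown = formatReadout(raw, spec);
    if (shown !== expected) failures.push(`${raw}: expected "${expected}", got "${shown}"`);
  }
  return failures;
}
```

Keeping unit conversion and rounding in one pure function, and pinning its output with explicit expected strings, catches regressions such as a wrong scale factor or decimal count before they reach a screen.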

It’s always a bit of a conflict having an engineer QA their own work; cognitive bias can significantly affect our perception and perspective. To overcome this, it’s crucial to try to prove as many assumptions false as possible. This process helped me cut unnecessary weight, catch incorrect assumptions, and discover more efficient ways to approach the same problems, resulting in a fair amount of on-the-spot code refactoring.

Although it’s essential not to get too caught up in the details early on, to maintain a quick pace during development, the day must ultimately come when UI elements need to be tightened up, color-matched, etc.

I think it’s very important to have a feedback loop, where you’re constantly thinking about what you’ve done and how you could be doing it better. — Elon Musk

A lot of UI refinement focused on finding the right color swatches to get things looking as close to correct as possible. This is trickier than it may seem, considering that the available images are photos taken in oddly lit environments, each showing slightly different colors; plus, I’m sure the colors have changed internally over time as well.

Though limited by the project scope and a locked-in design, UX refinement is still necessary. This primarily meant handling non-nominal states for components, guessing what unknown options may be hidden inside menus, ensuring touch areas are all large enough to use with gloves, and accounting for any odd interactions with the 3D environments.

Hardware Build

Some scope creep set in here, but once I learned the fundamentals of Dragon’s systems, I was far too fascinated not to attempt my own portable, home-lab-style recreation. It was arguably the least important use of my time on this project, but it happened to be the most fun. I believe it’s essential to find ways to make every project fun, as long as it doesn’t negatively impact timelines. We have to enjoy and be passionate about our work to produce something great. This build was a great distraction for me whenever I hit roadblocks in code.

When something is important enough, you do it even if the odds are not in your favor. — Elon Musk

With all the prior research done and a conceptual hardware stack determined, I should have all the information needed to create a physical representation of that stack. For the sake of time, most interconnecting system components are basic and cover only the minimum requirements to represent the functionality of a complete system.

BOM

| Qty | Device | MSRP (each) | Affiliate Link |
|---|---|---|---|
| 4 | Raspberry Pi 3B+ | $35 | Amazon |
| 1 | LattePanda 2G/32G | $115 | Amazon |
| 2 | Raspberry Pi Zero | $15 | Amazon |
| 3 | Jetson Nano B01 | $199 | Amazon |
| 3 | 1080p 21” Touchscreen | $399 | Amazon |
| 1 | Elgato Stream Deck | $149 | Amazon |
| 1 | MikroTik hAP lite | $57 | Amazon |
| 1 | Generic Ethernet Switch | $55 | Amazon |

Final Thoughts

Before taking on this project, I had no idea how much I would learn about complex systems such as GNC (guidance, navigation, and control), life support, inertial navigation, data bus protocols, etc. Due to the limited screengrabs available, I needed to understand how these subsystems worked to better guess what data may be displayed on certain pages. Soaking it all up like a sponge has been a really enjoyable way to discover and understand the complexities of such systems.

I tried my best to keep this article short and sweet, but there is a lot of information to cover, and it requires a thorough explanation to be conveyed appropriately. If there are enough interested readers, I may put out a continuation piece; otherwise, I’d be happy to discuss the details further in the comments.

What’s next?

Well, my mind is already thinking about how this all could apply to Starship and evolve into a much larger network of stations and user terminals. I have a feeling a concept project is next up; 👉 follow me to stay tuned.

People should pursue what they’re passionate about. That will make them happier than pretty much anything else. — Elon Musk

*Disclaimer: All information used to direct/influence this project is publicly accessible and freely available online. No knowledge theft, intellectual property, or internal information gained during the interview process was knowingly used in any way for this project. All code is original and produced by Dillon Baird without outside assistance (unless otherwise explicitly stated). The interface design has been recreated from the many images of existing Dragon interfaces shared online, as well as the ISS docking simulator; copyright and intellectual property belong to SpaceX. The technical approach was loosely based on knowledge shared during Reddit AMAs, in articles, and in job listing requirements. Hardware assumptions were based on publicly accessible information, public interviews, NASA certifications, and FCC filings. No ITAR violations are intended by the content of this article.*

Send-Off

Hopefully, I’ve made it clear by now that working for SpaceX would be an absolute dream come true for me. If you work at SpaceX, or know anyone who does, and feel that I’ve clearly represented my technical abilities and could be a positive, impactful teammate, please don’t hesitate to share this article or my contact information. I can’t imagine a better outlet to further pursue my passions for technology, exploration, and the future of humanity.

So long and thanks for all the fish.

Sources/References

(In no particular order)

- Building the software that helps build SpaceX

- Where is the technology of SpaceX built by Musk?

- Space Exploration Technologies Corporation March-April 2004 Update

- What Hardware/Software Does SpaceX Use To Power Its Rockets?

- Learning How to Build a Spaceship in 2020 From SpaceX

- ELC: SpaceX lessons learned

- LinuxCon: Dragons and penguins in space

- Displaying HTML Interfaces And Managing Network Nodes… In Space!

- Software Engineering Within SpaceX

- How SpaceX does software for 9 vehicles with only 50 developers — and gov’t requiring 50x the staff

- How SpaceX develops software

- Basics of Space Flight

- Earth-centered, Earth-fixed coordinate system

- Flickr: SpaceX Official Photos

- We are SpaceX Software Engineers — We Launch Rockets into Space — AMA

- We are the SpaceX software team, ask us anything!

- Development of the Crew Dragon ECLSS

- Review of Modern Spacecraft Thermal Control Technologies

- Autonomous Precision Landing of Space Rockets

- Techniques for Fault Detection and Visualization of Telemetry Dependence Relationships for Root Cause Fault Analysis in Complex Systems

- Challenges Using the Linux Network Stack for Real-Time Communication

- Active Thermal Control System (ATCS) Overview

- SpaceX’s Dragon: America’s Next Generation Spacecraft

- SpaceX: Making Commercial Spaceflight a Reality


Last Updated: January 5, 2022

Dillon Baird - DillonBaird.io
